All your data are belong to us

Should you worry about AI training on your data?

In the early 2000s, one of the first video memes to go around the internet was built on a clip from the Mega Drive port of the 1989 video game Zero Wing, "All your base are belong to us":

This was a poor translation from a video game where the enemy cyborg had taken over the good guy's bases.

When I hear fears about using AI tools like ChatGPT in a work setting because the AI might learn someone's data, I can't help but think of this meme! It's a fair question and a fair concern, but I would argue that, in the coming years, most companies will be in a spot where they PAY for the AI to ingest and train on their data.

Bold statement, I know. Let's dig in.

To set the context: as AI tools like ChatGPT become more pervasive, we are going to rely on them more and more. I already find myself using AI tools more than Google, for instance (see https://www.thoughtfulbits.me/p/my-google-usage-declined-23-after).

For example, today, I asked ChatGPT how to go salmon fishing, including what gear I would need (it is summer in Seattle, after all!). It did a fabulous job recommending gear clearly and understandably. It even recommended several different fishing pole brands: Shimano, Penn, Daiwa, Orvis, and Ugly Stik. As a consumer, that was fabulous. But what if I were the CEO of a fishing pole manufacturer not on that list? Oh (*&()&)#$. How do I get on that list?

AI will absolutely start replacing search--whether it's a future ChatGPT or some evolution of Google or something else, we will find out soon enough. Regardless of which company wins, it's clear the future of search in the coming years is generative AI. But if the AI doesn't know about your company, and consumers are using search to find out about you, now what?

Hold that thought for now.

AI Bans

Separately, when I am not coding, I spend a lot of my time talking to different companies about Polyverse Boost. A few of those conversations were fascinating. Three of the companies said some form of "we don't want to use AI as we are worried about our intellectual property." A fourth was worried about upcoming AI regulations and wanted to avoid doing something that would get them in trouble in the future.

If you haven't been deeply tracking the AI space, there is a legitimate reason to ask those questions and be thinking about that concern. AI tools fundamentally work by using data to train AI engines. Vastly oversimplifying, but more data tends to yield a smarter AI. In the fishing example above, somewhere, somehow, the team at OpenAI trained ChatGPT on a document that said that Shimano makes fishing poles. They may or may not have trained with a document about St. Croix fishing poles.

Training AI systems on marketing materials is fine. But what happens if the training data is something sensitive, like your medical history? Or if the data is supposed to be secret? There are reports of ChatGPT having Windows 10 activation keys, for instance (note, I have not personally verified this report!):

https://twitter.com/immasiddtweets/status/1669721470006857729?s=20

Going back to the concerns these four companies had, it clearly is a legitimate question to ask: I really would not want ChatGPT trained on my credit card number, for instance!

With these concerns, those four companies all took the simple but draconian approach of banning AI tools like ChatGPT and Microsoft's GitHub Copilot.

Wow! A blanket ban is definitely an "easy" answer, but ultimately destructive. A more forward-looking competitor will use AI tools for marketing, internal productivity, and so forth. Those companies with bans are going to find themselves increasingly uncompetitive over time.

What should you do instead?

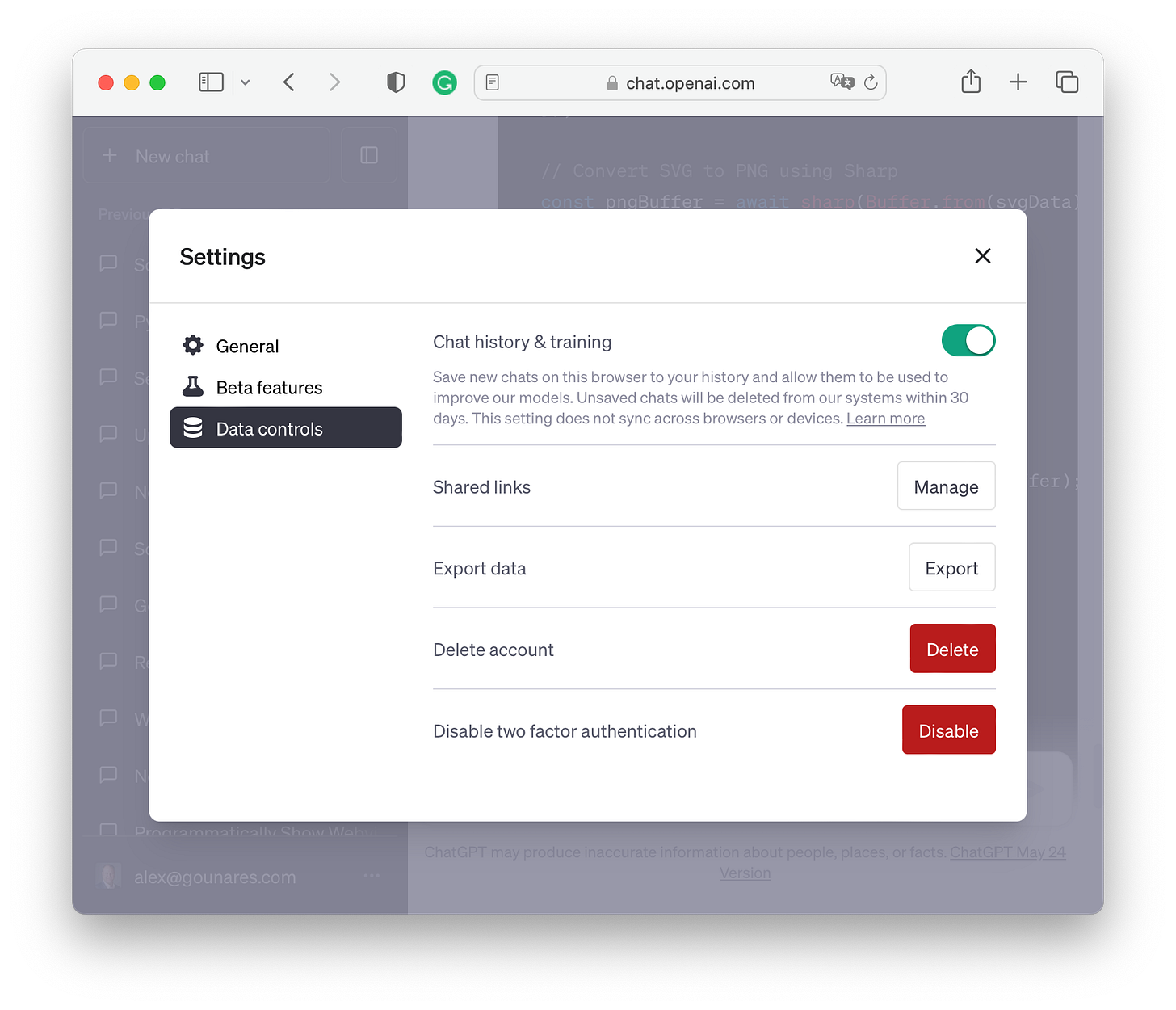

Unfortunately, there is no "one-size-fits-all" answer, and to make things more complicated, different tools have different rules for handling data used for training. At the time of this writing, for instance, Microsoft's GitHub Copilot says that Microsoft can use your company's code for future training of the Copilot system. Conversely, many tools built on OpenAI (such as my own company's Boost product) guarantee that your data is kept private and not used for AI training outside of your organization. Other tools like ChatGPT offer privacy options where you can choose whether the data is kept private or can be used for training.

The privacy landscape will shortly become even more complex. On June 13th, OpenAI released its "function calling" API (https://openai.com/blog/function-calling-and-other-api-updates). It's hard to overstate how important this capability is. In simple terms, it allows software developers and companies to integrate private data and code with the OpenAI GPT AI. Imagine the power of ChatGPT but with full knowledge of every document in your organization. This capability is a significant advance which I will discuss in future posts. But for today's purposes, it simply means there will be more hybrid AI solutions that keep confidential company information private while still harnessing the capabilities of ChatGPT's AI.
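To make the hybrid idea a bit more concrete, here is a minimal sketch in Python of the local half of that pattern. It makes no network call: the model's reply is simulated as a function name plus JSON-encoded arguments, which is the general shape the function calling feature uses. The catalog, the product id, and the names `lookup_product`, `FUNCTIONS`, `HANDLERS`, and `dispatch` are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical private data that stays inside your own systems.
PRIVATE_CATALOG = {
    "riverking-9": {"type": "fishing pole", "length_ft": 9, "target": "salmon"},
}


def lookup_product(product_id: str) -> dict:
    """Return catalog details for one product, or an error marker."""
    return PRIVATE_CATALOG.get(product_id, {"error": "unknown product"})


# Description of the function in JSON Schema style. This is what you would
# hand to the model so it knows the function exists (assumed layout).
FUNCTIONS = [
    {
        "name": "lookup_product",
        "description": "Look up a product in the company catalog by id.",
        "parameters": {
            "type": "object",
            "properties": {"product_id": {"type": "string"}},
            "required": ["product_id"],
        },
    }
]

# Maps function names the model may request to local Python callables.
HANDLERS = {"lookup_product": lookup_product}


def dispatch(function_call: dict) -> str:
    """Run the requested local function and return its result as JSON text."""
    handler = HANDLERS[function_call["name"]]
    arguments = json.loads(function_call["arguments"])
    return json.dumps(handler(**arguments))


# Simulated model output: a function name plus JSON-encoded arguments.
simulated_call = {
    "name": "lookup_product",
    "arguments": '{"product_id": "riverking-9"}',
}
result = dispatch(simulated_call)
```

In this sketch the model never sees the whole catalog. It sees the function description and whatever single result your code chooses to send back, so the privacy question shifts to what each function is allowed to return.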

Regardless of the specific technology used, the first step in devising any AI policy is understanding the privacy features of any AI-based products you are considering.

Marketing

Marketing is the easiest and most obvious scenario for using AI tools. Just as you might invest in building an extensive website detailing your products and services, use AI tools to help create those materials! And remember, it's not just the marketing brochures. The more the AI deeply understands your product, the better.

Consider the fishing equipment example above. If you manufacture fishing equipment, the more that the AI understands the fishing poles, reels, lures, and your other products, the more likely the AI will give good results incorporating your product. Everything from how to use your fishing pole to what kinds of fish it will catch to how to do repairs will be helpful.

I experienced this firsthand when using ChatGPT earlier this year to help with some programming. The AI did not understand the code I started off with very well. When I switched to functionality the AI knew, I experienced a 100x speedup in my productivity (https://www.thoughtfulbits.me/p/ai-fragile-systems-the-death-of-brittle).

This effect will be so significant that I expect in the future, OpenAI and others will be able to charge for incorporating data into training sets.

In short, at some point in the near future, companies will pay to have their data understood by AI.

As a rule of thumb, if the information you are working on is something that you would want Google Search and Bing to know about, then it's a great idea to share that data with AI systems like ChatGPT.

Regulated Scenarios

Regulated scenarios, like medical information, financial information, and so on, introduce more complexity but are just as easy to reason about. If you would not post the data online, only use tools that guarantee privacy and confidentiality. Follow the same rules you currently follow for HIPAA, PCI, GDPR, etc., and treat AI tools like any other software tool.

It might be tempting to try to adopt a nuanced policy, e.g., using GitHub Copilot (not private) on this code base is OK, but Copilot is not allowed on this other code base. While that kind of policy might be technically acceptable, in practice, people are still people and will make mistakes, forget the rules, etc. Keep your policies simple and clear!

Intellectual Property

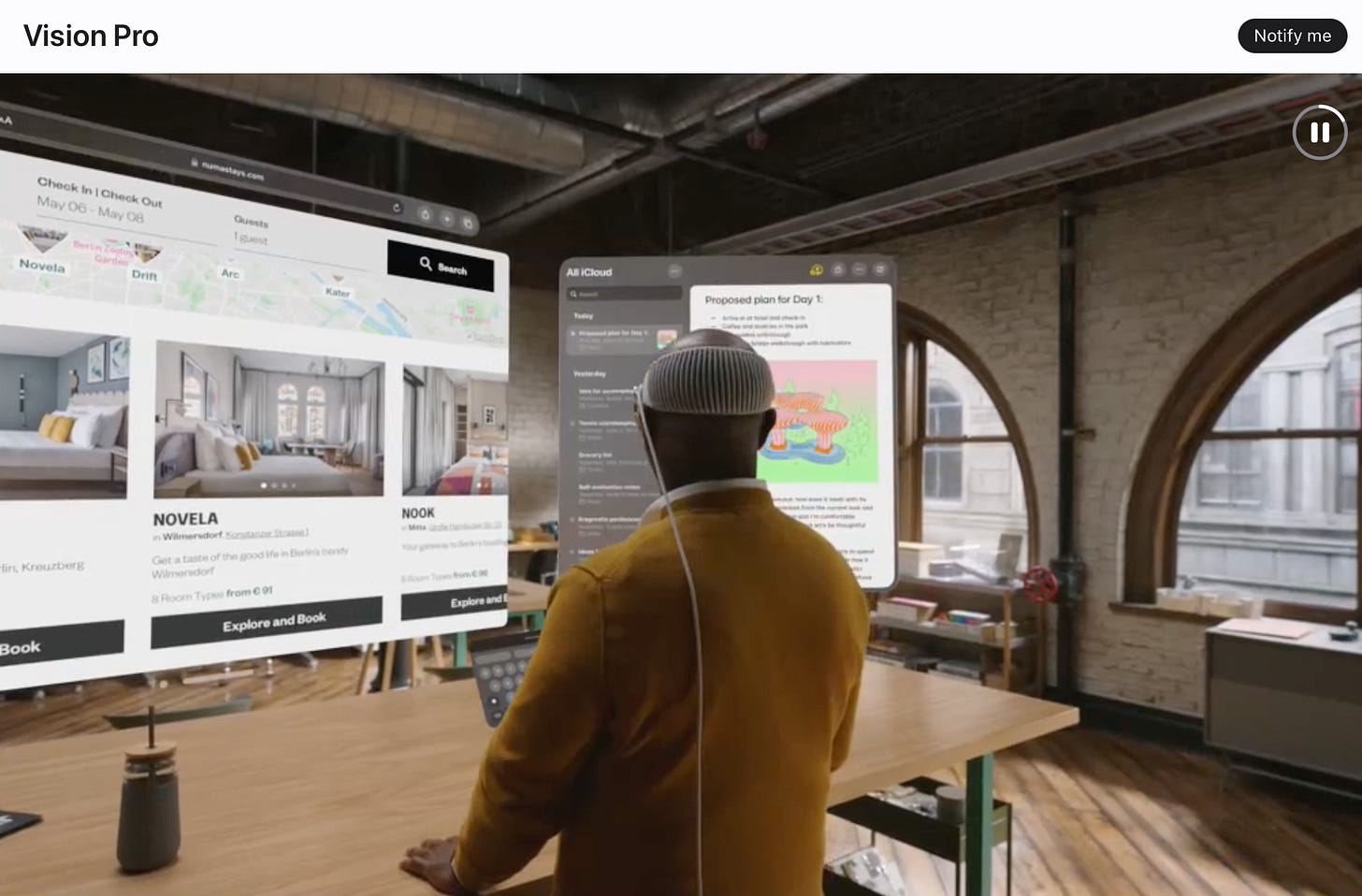

Handling intellectual property is the most complex scenario because the answer may change over time. Let's take Apple's recent launch of the Vision Pro augmented reality glasses (https://www.apple.com/apple-vision-pro).

I'm personally excited about this new device. I use a four-monitor setup in my main office for increased screen real estate. However, I travel frequently, and the tiny laptop screen (by comparison) is much more limiting and cumbersome. Vision Pro looks like it will really solve that scenario for me, maybe even to the level of making augmented reality my primary interface. We shall see--and much will depend on how well software developers are able to take advantage of this new capability.

Clearly, now that Apple has publicly announced this product, they should want OpenAI and others training AI systems on the SDK. As mentioned above, the more the AI understands your products, the better it will incorporate your products into its results.

However, what about everything in the past years while Apple developed the product? Apple is famous for trying to keep products secret (though admittedly, while the name Vision Pro was kept relatively secret, it was well known that Apple was working on glasses!). But leaks aside, I am pretty sure Apple does not want people asking ChatGPT, "What new products is Apple working on?" and getting back detailed answers!

However, the answer might not be as clear for your own company and products. If in doubt, I would bias toward using AI tools more aggressively. You will gain productivity wins as well as marketing wins once you have shipped the product. Yes, there may be a theoretical possibility that OpenAI or other systems train on your data before your product launches, and further that a competitor just so happens to run a query against ChatGPT to learn about your product, and even further that based on that learning that competitor is able to change plans fast enough to change their product and beat you to market.

Whew...that is a big "IF"! Weighed against the certainty of productivity wins--as the old saying goes: "A bird in the hand..." Or perhaps more relevantly (but for AI):