Is AI Overhyped?

The Trough of Disillusionment

AI is on a wild ride right now.

There is no escaping the hype and excitement around AI. Every day is filled with often breathless news about the latest advances.

But we’re also starting to see a growing number of naysayers: voices ranging from Gartner to Charlie Munger (Warren Buffett’s business partner) are saying that AI is overhyped.

The history of technology is filled with overhyped technologies that have failed to live up to their original promise (at least so far!): Bitcoin/blockchain, virtual and augmented reality (Microsoft HoloLens, anyone?), and, perhaps most interestingly, AI itself. This is not the first time we’ve gotten caught up in a hype cycle around AI. That happens fairly regularly, every twenty years or so, starting back in the 1950s with the first programs that could play checkers and prove mathematical theorems: https://www.kdnuggets.com/2018/02/birth-ai-first-hype-cycle.html

On the flip side, some hyped technologies do end up changing the world: the personal computer, the Internet, and mobile phones, to name just a few.

So which is it for AI (this time!)?

The Trough of Disillusionment

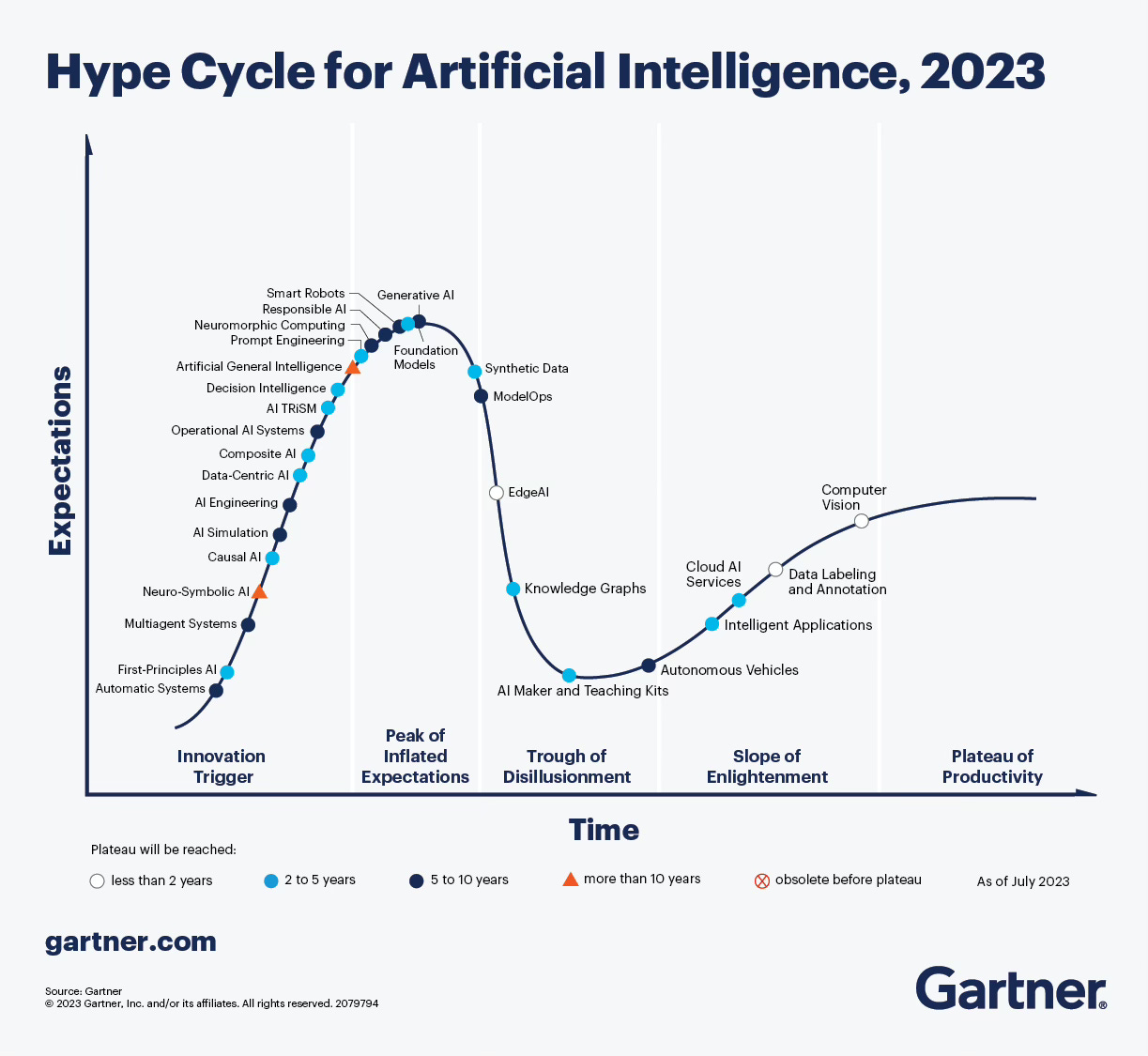

To set some context, nearly every popular technology goes through an initial period of euphoria and enthusiasm. Gartner popularized this pattern with its “Hype Cycle” model. The basic idea is simple: new technologies go through an initial phase of excitement and hype about their world-changing implications, but reality invariably sets in. “Hey, I thought we were supposed to have flying cars by now!”

Welcome to the trough of disillusionment. Few technologies live up to the hype right away; most take longer than expected to deliver real value and fulfill their original promise.

There are two fundamental drivers behind the hype cycle, explaining why the promised benefits of a technology take longer to materialize:

Humans don’t change as fast as technology! The most successful and rapidly adopted technologies are ones that meld with existing tools, processes, and behaviors. Email, for example, was a faster version of the letters (and faxes) that people were already sending.

Many blockchain applications, on the other hand, have failed at that melding. One often-cited blockchain scenario is replacing title insurance in home buying.

If all property titles were stored on a publicly visible and auditable blockchain, there would be no need for title insurance (or at least not for the expensive title insurance process we know today!). But even though blockchain has been around for roughly 15 years now, and this scenario has been discussed for much of that time, it still hasn’t happened.

Quite simply, there are too many existing moving parts and human processes involved in home buying: you’d have to get local regulations changed, you’d have to convince home buyers that the new process was better, you’d have to migrate existing property records onto the blockchain, and of course, you’d have to make using and updating a blockchain tremendously simpler than it is today (the user experience of many blockchain applications is still terrible, even in 2023).

AI technologies will face similar challenges. Just because you can put a chatbot UI on something doesn’t mean it’s the right way to meet users where they are.

There will be a lot of experimentation and, by definition, failed experiments as technology vendors figure this out. We’re already seeing this in a few places. The first iteration of Microsoft’s Windows Copilot, for instance, seems to have missed the mark (https://www.pcworld.com/article/1974843/windows-copilot-cant-decide-what-it-is.html). Is a chat window really the right way to interact with an operating system? I’d argue that the more an AI can solve problems on its own, the better, at least at the operating system level. The OS should just work.

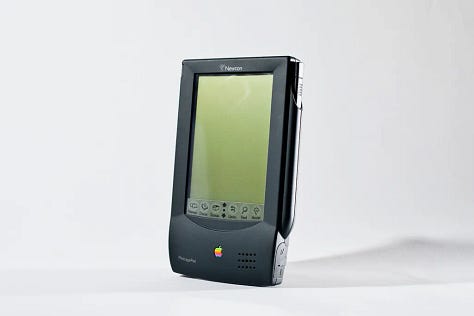

For AI, I’ve called this tension between a technology and the way humans work with that technology the “AI Gap.” AI technologies are racing ahead furiously, but humans are still humans. Winning AI products will bring AI seamlessly into existing processes and tools in a way that feels natural and comfortable to users. See my earlier note for more on this: https://www.thoughtfulbits.me/p/the-ai-gap

The second driver: the technology simply isn’t ready. Tablet-style PCs have been a dream of technologists ever since the original Star Trek series in the late 1960s. There were numerous attempts to build such a device, such as the Apple Newton in 1993. I personally worked on Microsoft’s Tablet PC in the early 2000s.

Tablet PC Style Devices

In all of those earlier attempts, the technology for tablets simply wasn’t ready. It wasn’t until Apple’s iPad that the combination of software and hardware progress was enough to make it a compelling device.

In the past few years, we’ve seen the same challenge with augmented and virtual reality devices. Who wants to wear a bulky headset? Maybe, just maybe, the new Apple Vision Pro headset will be advanced enough to make the breakthrough (I’m hopeful!), but that’s a topic for a different post.

There is no doubt that the new generative AI technology is stunningly impressive. But it still suffers from the hallucination problem (i.e., the AI sometimes generates made-up answers). Unless products are designed with hallucination in mind, they could easily fall flat with end users.

What about AI now?

While AI, broadly defined, has been widely adopted in recent years in scenarios as diverse as Siri voice recognition, Tesla Autopilot, and credit card fraud detection, ChatGPT (and generative AI more broadly) has captured the world’s imagination since its introduction, still less than a year ago!

Overhyped, or not?

My own view: not.

Of course, some articles will clearly overhype the technology and downplay the risks. But broadly, I think we (collectively) are underestimating its impact.

This is Amara’s Law: humans tend to overestimate the impact of a new technology in the short run and underestimate it in the long run.

We are all familiar with Moore’s Law: the idea that computers double in processing capability every two years (to be fair, Moore’s original formulation was about transistor count, but this is a reasonable approximation). Thanks to Moore’s Law, the Oura fitness and health ring I wear has more processing power than one of the original Cray supercomputers, which cost over $30 million in today’s dollars!

That is the result of doubling every two years.

However, the compute used to train the underlying AI models has been growing at a rate of 750x every two years: https://medium.com/riselab/ai-and-memory-wall-2cb4265cb0b8

750x. 🤯

It’s truly astonishing. Of course, technical readers may debate the exact math behind the 750x figure, but that doesn’t change the overall trend.
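To make the gap between these two rates concrete, here is a back-of-the-envelope sketch that compounds each rate over the same horizon. It assumes both rates hold constant the whole time, which no real-world trend guarantees; the 2x and 750x factors are simply the figures quoted above, and the `compound` helper is a hypothetical name for illustration.

```python
# Back-of-the-envelope comparison of two exponential growth rates.
# Assumption: each rate stays constant over the whole horizon,
# which real-world trends are not guaranteed to do.

def compound(factor_per_period: float, years: float, period_years: float = 2.0) -> float:
    """Return total growth after `years`, given a multiplicative
    `factor_per_period` applied once every `period_years`."""
    return factor_per_period ** (years / period_years)


MOORE_FACTOR = 2.0  # doubling every two years
AI_FACTOR = 750.0   # the 750x-every-two-years figure quoted above

for years in (2, 4, 6):
    moore = compound(MOORE_FACTOR, years)
    ai = compound(AI_FACTOR, years)
    print(f"After {years} years: Moore's Law ~{moore:,.0f}x vs. AI ~{ai:,.0f}x")
```

Under these assumptions, six years of doubling yields 8x, while six years at 750x per two years yields roughly 420 million x, so the two curves end up more than 50 million times apart. That is why the exact value of the 750x figure matters less than the shape of the curve.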

Just look at the pace of progress with ChatGPT. At first, it could only respond with text. That alone was stunning.

Then, this summer, it gained the ability to execute code and perform mathematical analysis on its own. Now, it can recognize and manipulate images.

All of this in less than a year! If you haven’t seen the new ChatGPT vision features, this post is well worth reading: https://openai.com/blog/chatgpt-can-now-see-hear-and-speak

At some point, we’ll see a slowdown, but at least in the short run, I expect the pace of progress to continue. Fundamentally, AI quality is driven by “more data and more compute.” Thanks to the massive investments being made in AI ($13 billion from Microsoft!), AI is getting both more compute and more data.

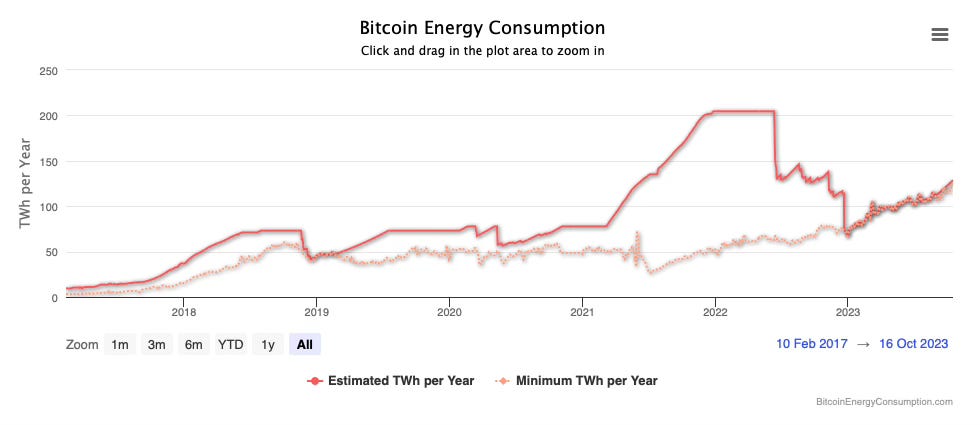

But one could have looked at an investment chart for Bitcoin and blockchain technologies a few years ago and seen something similar. Just like AI today, there was a ton of investment in Bitcoin technologies, and Bitcoin’s energy consumption skyrocketed in 2021 and 2022.

However, the crucial difference is the impact on day-to-day life and work. I still program professionally for my day job, and my productivity has gone through the roof with AI tools. I simply can’t imagine writing software without AI now; it would be too painful!

Similarly, I’m now making social media videos (https://www.tiktok.com/@boostedcoder), and I use a series of AI tools (e.g., auto-captions) to make the process quick and painless. Again, without AI, I would not have the time to do all of this.

I’m sure many of you have used ChatGPT yourselves or know friends who have. It’s been pervasive, used for everything from helping kids with homework to selling to writing code.

And that’s just with the tools we have now. Nearly every software company I know is working out how to incorporate AI into its products. Not every one of these attempts will be a knockout success, but what happens in 2024, when every piece of software you use becomes dramatically more capable thanks to AI?

Bottom line: AI is underhyped. Buckle up!