My blog post, "Accenture: Dead Man Walking," stirred up quite a conversation. The question that kept surfacing was: who will thrive in this brave new world of AI, and who will struggle and inevitably be disrupted? Here is a simple framework for thinking it through.

First, though, it's vital to appreciate the mind-boggling pace of AI innovation. We are on an exponential growth curve in capability. Exponential curves are hard to reason about; people overestimate them in the short run and vastly underestimate them in the long run. But with AI, it's easy to underestimate them even in the short run!

Take AI as applied to legal scenarios, for instance. I touched on this briefly in my earlier note, but let’s explore it in more detail.

AI has been used for a while now in software products for lawyers. Looking at what was available a year ago, many of these tools were impressive but essentially just a step above grammar and spell checkers. A popular tool, for instance, could automatically detect inconsistencies in legal language and suggest redlines. Useful, to be sure, but just the beginning.

Then, in November 2022, ChatGPT launched, and it was capable enough to take a simulated bar exam (the qualification test for lawyers). We seemed to have skipped the whole Turing Test idea and gone straight to the bar!

But it scored only around the bottom 10% of test takers. Think Saul Goodman from Breaking Bad. Sure, he's a lawyer, but maybe not the one you want to hire!

By March 2023, GPT-4 scored around the top 10% of test takers.

Wow. Definitely Harvey Specter level!

There is more coming. This spring, OpenAI introduced a feature called "plugins." These got less press because, for now, they are fairly technical. But the net effect is that plugins let developers augment ChatGPT with their own data, knowledge, and algorithms (such as every contract and other legal document in your company). By the end of the year, state-of-the-art legal tools will combine the smarts of a top-rated lawyer with perfect knowledge and memory of every legally relevant document in your organization.

How well do your current attorneys remember obscure details from ten-year-old contracts?

Innovation vs Adoption

However fast AI advances, it will inevitably run into adoption barriers in the real world. In the Accenture post, for example, I described the financial and cultural challenges Accenture faces in adopting it.

There can also be regulatory or political hurdles. For example, Seattle Public Schools, where I live, has banned ChatGPT. Fear can slow adoption, too. Apple is arguably the technology company most in need of an AI boost: Siri, once a fun breakthrough, now feels like a manual typewriter compared to ChatGPT! Yet Apple recently decided to bury its head in the sand and restrict its employees from using ChatGPT, despite the new IP protections OpenAI has offered.

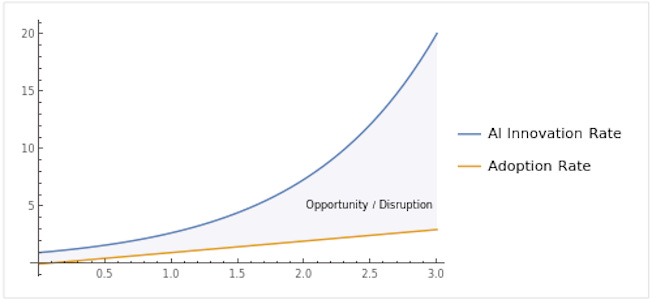

If we distill the current situation into a simple graph, we see AI innovation advancing exponentially while real-world adoption can trail behind in a more linear progression. The gap between these two trajectories is what I call the "AI Gap."

To use this chart, pick the industry or company you care about and map out innovation versus adoption. If there is a gap, it represents both an opportunity and a threat, depending on which side of it you are on. If your organization lags in AI adoption, the gap becomes a significant risk: an opening for someone else to leverage AI and disrupt your business.
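The chart is really just two curves and their difference. As a rough sketch, assume innovation follows I(t) = I0·e^(kt) and adoption follows A(t) = A0 + m·t; the AI Gap is then I(t) − A(t). The parameter values below are hypothetical, picked only to show the shape of the curves, not measured from any industry.

```python
import math

# Hypothetical parameters chosen purely for illustration; they are not measurements.
INNOVATION_START = 1.0   # capability index at t = 0 (assumed)
INNOVATION_GROWTH = 0.7  # exponential growth rate per year (assumed)
ADOPTION_START = 1.0     # adoption index at t = 0 (assumed)
ADOPTION_SLOPE = 0.5     # linear adoption gain per year (assumed)


def innovation(t: float) -> float:
    """Capability grows exponentially: I(t) = I0 * e^(k t)."""
    return INNOVATION_START * math.exp(INNOVATION_GROWTH * t)


def adoption(t: float) -> float:
    """Adoption grows linearly: A(t) = A0 + m t."""
    return ADOPTION_START + ADOPTION_SLOPE * t


def ai_gap(t: float) -> float:
    """The 'AI Gap': how far capability has run ahead of adoption."""
    return innovation(t) - adoption(t)


if __name__ == "__main__":
    for year in range(6):
        print(f"year {year}: innovation={innovation(year):6.2f} "
              f"adoption={adoption(year):5.2f} gap={ai_gap(year):6.2f}")
```

For any positive exponential rate, the exponential term eventually outruns the linear one, so the gap keeps widening year after year. That is why a lagging organization's exposure tends to grow over time rather than hold steady.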

Let's apply this principle to several key sectors:

Education

As the actions of Seattle Public Schools and other schools nationwide show, AI triggers a very polarized reaction among educators. Supporters argue that every student can now have a personal, infinitely patient tutor. For schools with high student-to-teacher ratios, this could be a game changer. Detractors worry about AI doing the heavy lifting, turning students into passive button-clickers who fail to learn key skills and concepts.

This debate slows down decision-making for many schools, and rightfully so: public debate and the eventual consensus are the heart of democratic processes! But if you are an entrepreneur wanting to innovate in AI education software, the path to victory lies in appealing directly to individual students, not in persuading school boards mired in lengthy processes. We may even witness the rise of private universities offering affordable, AI-powered education.

Billable Hour Businesses

My earlier post talked at length about Accenture, but the issues apply to almost any business that sells knowledge work by the hour: many marketing firms, consultancies, law firms, outsourced software development shops, and so on. These companies face a double challenge. If their revenue depends on the number of hours worked, AI-driven productivity gains already reduce those hours today, and as AI keeps improving, the billable hours for the same job will keep shrinking.
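To make the double challenge concrete, here is a back-of-the-envelope sketch with entirely hypothetical numbers: a job billed at an assumed $200 an hour that takes an assumed 100 hours today, with AI assumed to trim a quarter of the remaining hours each year. Under pure hourly billing, revenue for the same job falls right along with the hours.

```python
# Hypothetical figures for illustration only; none of these numbers come from real firms.
HOURLY_RATE = 200.0       # dollars billed per hour (assumed)
BASELINE_HOURS = 100.0    # hours the job takes without AI (assumed)
ANNUAL_HOURS_CUT = 0.25   # fraction of remaining hours AI removes each year (assumed)


def hours_for_job(year: int) -> float:
    """Hours needed for the same job after `year` years of compounding AI gains."""
    return BASELINE_HOURS * (1 - ANNUAL_HOURS_CUT) ** year


def revenue_for_job(year: int) -> float:
    """Revenue under pure hourly billing: hours worked times the hourly rate."""
    return hours_for_job(year) * HOURLY_RATE


if __name__ == "__main__":
    for year in range(4):
        print(f"year {year}: {hours_for_job(year):6.2f} hours -> ${revenue_for_job(year):,.0f}")
```

With these assumed numbers, the same job drops from $20,000 to $15,000 after one year and to $11,250 after two, even though the client gets the same result.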

Consider the video-creation side of marketing. There are new "text to video" tools, such as synthesia.io and runway.ml. These tools can literally take a text prompt (à la ChatGPT) and automatically create a full video. The clip below is worth watching!

These tools are rough today, though good enough for quick social media posts, training videos, and the like, but the fact that they exist at all is downright mind-boggling. What used to cost thousands of dollars can now be done in seconds. The tools will continue to improve rapidly, and the more they improve, the more AI will eat into the traditional work of video creators.

It's too simplistic, and I think incorrect, to look at these tools and say, "Oh, human designers, human videographers, and so on are going away." An AI can generate thousands of images, logos, videos, and more, but they are not necessarily very good.

While my technical readers may object to this oversimplification, a handy way to look at ChatGPT and similar AIs is that they have been trained on billions of documents from the internet, including countless marketing messages. Thus, all things being equal, the AI will generate "average" marketing material. Unfortunately, that average piece of marketing material is awful or, at best, unmemorable.

That's where the AI Gap comes into play. Talented marketing professionals (and, for that matter, lawyers, engineers, and other "billable hour" knowledge workers) who take advantage of AI tools will see their productivity and creativity skyrocket. After all, the demand for marketing is not going away; companies still need ways to tell the world about their products and services. Similarly, software runs just about every device and gadget in modern society, so the need for software is not going away either. And for better or worse, I think society will need lawyers for centuries to come!

Because that demand persists, there will still be a business in providing these services. The winners will be the talented individuals and teams that take advantage of AI tools. The losers will be those who can't adapt, or can't adapt quickly enough, to capture the gains. I listed several reasons for this above; public companies bound by revenue targets are an easy example.

But other adoption obstacles can be subtler and harder to measure. An engineer at a large technology company recently griped that while AI made him more productive in his own coding, the gain was irrelevant because actually deploying the new code took weeks of discussions and code reviews across numerous teams.

Culture matters!

Healthcare

AI is already used extensively in medical research, from drug discovery to X-ray image analysis. And many parts of the healthcare market are heavily regulated, an obvious brake on the adoption of AI-driven technologies. The AI Gap framework readily applies here as well.

However, healthcare may hit an interesting tipping point in the coming years, one where the innovation-versus-adoption dynamic shifts radically. Consider this quote from Peter Diamandis (founder of the XPRIZE Foundation and co-founder of Singularity University):

https://twitter.com/PeterDiamandis/status/1651025790090502144

"Within 5 years, it will become malpractice for a physician to diagnose a patient without an AI advisor in the loop."

This is a fascinating and thought-provoking tweet. I suspect Peter is right. At some point, health insurance companies will look at their data and say: "Wow, AI-assisted doctors make fewer mistakes than unassisted doctors!" It may take a while, but the actuarial math will be unavoidable at some point. Once that happens, the dynamics of the entire market will shift.

The same thing will happen with AI-assisted driving, such as Tesla's Full Self-Driving. The safety record so far is dramatic. How long before auto insurers start charging different rates depending on whether a car has AI assistance?

Stepping back, this illustrates a very important point: barriers to adoption are not static; they can change. One thing I like about the insurance business is that it is very predictable over the long run. Math wins in the end! So while the industry may be frustrating at any given moment (just ask anyone trying to get insurance coverage for the new weight-loss medications Wegovy and Mounjaro), if the data favors change, the change will eventually happen. So for everyone wanting the new weight-loss medications, have faith! Eventually, somebody in the industry will realize that Wegovy is cheaper than heart bypass surgery!

Regulatory changes can have profound downstream consequences. We don't have coast-to-coast supersonic commercial flights because of a 1973 rule banning civil supersonic flight over US land (rather than capping the sonic boom at a specific noise level regardless of speed). At some point, a forward-looking FAA administrator will make a tiny rule change, and within a few years, it will be possible to fly from LA to New York just to have supper.

To return to our earlier discussion of education, what happens if the Common Core State Standards Initiative adds "AI proficiency" to its high school standards in the United States?

Jobs to Be Done (JTBD)

To close out this note, another way to look at winners and losers in the AI space is to ignore AI altogether and use the "Jobs to Be Done" lens instead.

The late Clay Christensen (of The Innovator's Dilemma fame) developed and popularized this idea. Its framing is quite simple:

"Your customers are not buying your products. They are hiring them to get a job done."

Clay Christensen, Harvard Business School

Many companies talk about being "customer-focused," but I like the more nuanced and holistic approach of JTBD. Just think of products you own that are chock-full of features, with more arriving every year, yet fail at the most basic job: solving what the customer really wants.

The industry that I'm in, cybersecurity, is arguably one of the worst offenders here. Collectively, we sell billions of dollars of complex products, forcing our customers to become cybersecurity experts. Everyone outside of our industry just wants a computer that works and can't be hacked.

All other things being equal, I would bet that in any given market, a company focused on JTBD will outperform one that isn't. JTBD applies to the AI Gap as well. If there is a gap between what AI can do in a given field and what customers are adopting, what are the barriers? Those barriers are effectively part of the "job to be done" that the customer is wrestling with, rightly or wrongly.

In this mindset, the question then becomes: what can AI do to help with the actual job the customer is trying to do?

As I discussed in my post on Accenture, regulatory compliance is an excellent example of this JTBD opportunity. For any company or product with compliance requirements, those requirements are unavoidable. Thus, AI tools that make compliance easier, quicker, or more accurate in those markets are likely to be more successful than those that don't.

At the end of the day, customers matter! Check out https://www.thrv.com for some great resources on the JTBD methodology if you are interested in learning more.