Why you'll buy a new PC every year soon

The return of client-side computing.

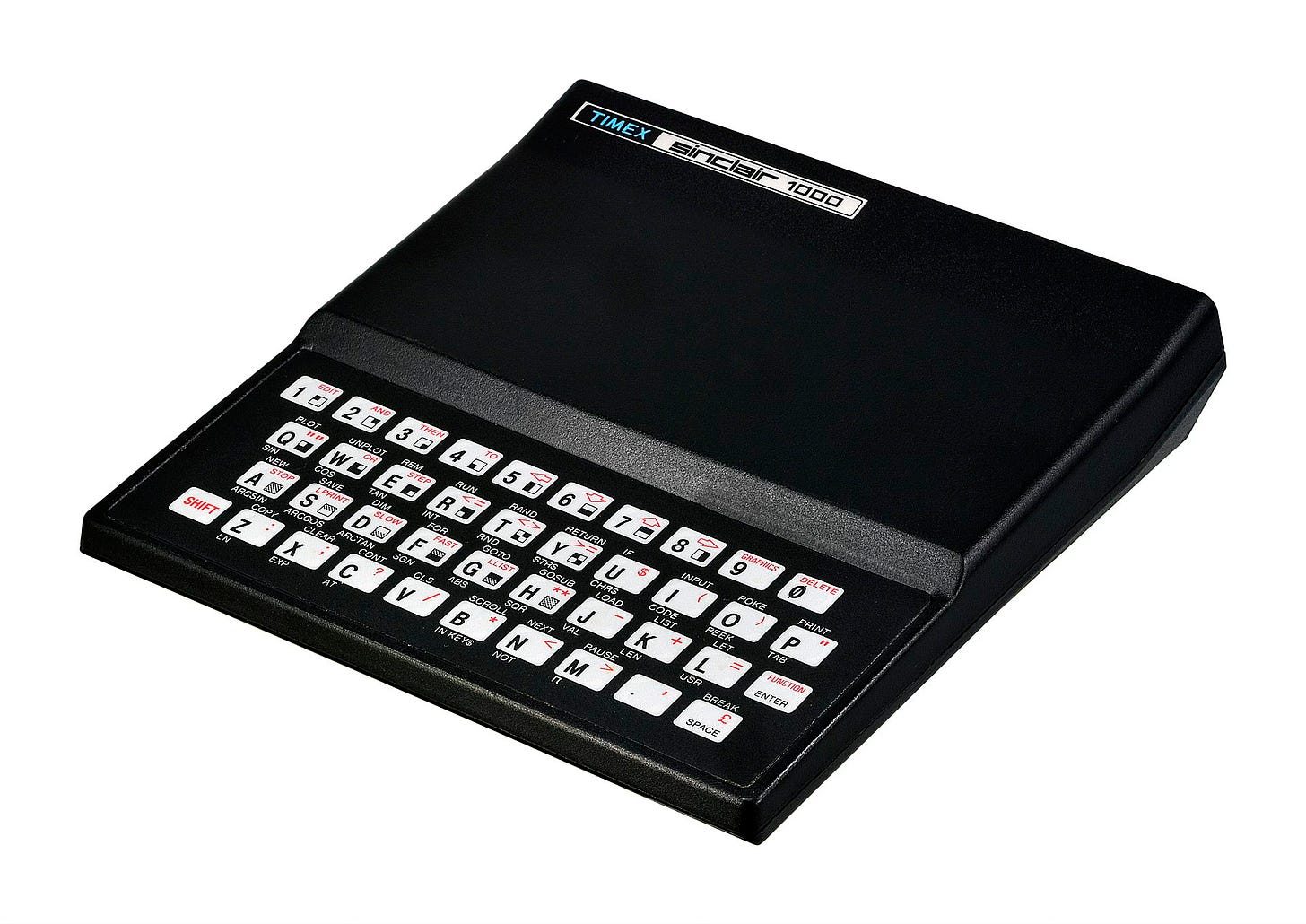

My first PC was a Timex Sinclair 1000.

It had a whopping 2 KB of RAM and a tape cassette for the hard drive! My first program was "Hello World!" of course, followed shortly after that by my first video game: a dot that you could move and shoot other dots!

10 PRINT "HELLO WORLD!"

20 GOTO 10

In the years that followed, we saw an incredible rise in computing capabilities. Nearly every year brought some new marvel: the first color computer, the first printers, the first laser printer, and the first video and sound cards. Successive generations of chips, from the 286 to 386 to 486 to Pentium, yielded faster and faster computers. These advances felt real and tangible to users. The latest and greatest technology often opened up new scenarios that were impossible before, be it printing a color photo or playing a 3D-rendered video game like Doom.

By 2010, though, this race toward ever more amazing client-side computing experiences felt like it had come to an end. While the underlying chip and hardware technology continued to advance, it didn't "feel" as spectacular as it used to. Just ask yourself: for day-to-day computing tasks--browsing the web, doing email, writing a paper--how different does your PC feel today versus, say, five years ago or even ten years ago? (Setting aside AI! I'll come back to that!)

To be fair, there are certainly more specialized scenarios like computer programming or video editing where your PC's capabilities make a huge difference! The M-series chips powering Apple's new MacBooks and iMacs are phenomenal at tasks like video editing. Editing a long 4K video on an M3 MacBook is smooth and silky--something completely unthinkable even a few years ago on an Intel-based laptop.

But for most of us, the razzle-dazzle of new hardware enabling dramatically new experiences ended a decade or more ago.

This is about to change, and in a big way. AI is the driving force.

Fundamentally, AI is about computing power. Add more computing power, and the AI gets better and smarter. A smarter AI will write better code, suggest better text for emails and presentations, and create more realistic pictures.

Of course, at any given moment in time, there will be a lot of innovation and differences between specific AI algorithms and technologies, and many people are working on improving the performance of AI tools. At Polyverse, the upcoming release of our Boost AI tool for software developers is literally 100 times faster than the version we had last year, all thanks to algorithm and architecture improvements.

But even with those kinds of improvements, the fundamental principle still holds--at the macro level, the more computing power we put into AI, the more we'll get out of it.

This principle will be particularly true in the coming era of agent-based AI. Right now, AI technologies are used primarily in a reactive sense. ChatGPT is a great example--it's brilliant, but it only responds when you ask it a question. Credit card fraud algorithms run when you attempt a transaction. Email anti-spam filters run when a new email comes in.

In the upcoming agent model, an AI will constantly run in the background, proactively working on your behalf. It will continuously read your emails and work 'todo' lists, and then it will start on all those work items. When you get into work, the AI has already done a big chunk of your tasks. Similarly, an AI will constantly scour the Internet looking for things it knows will interest you, be it a news article or an upcoming rock concert.

These agent scenarios will be transformative but will need even more computing power!

If we need more computing power to run an AI (or, more likely, multiple AI systems) 24/7 for each of us, where will all this computing power come from?

The simple approach would be to build more and more data centers.

This data center build-out is already happening. Microsoft, Google, Amazon, and others are investing billions and billions to do so.

However, I predict the world will want more.

If you get increasing returns with more computing power, there will be a push to do more and more computing. It's basic economics.

Unfortunately, there will be a limit to how rapidly new data centers can be built. While there are many complexities, the fundamental issue right now is insufficient electrical power! This is driving leading innovators to invest in fusion power and other breakthrough power sources. This article is worth a read: https://futurism.com/sam-altman-energy-breakthrough.

A large-scale data center can consume hundreds of megawatts of power, and much of the power generation capability in the world is already in use or allocated. Thus, building new data centers to meet the demand will also necessitate building new power plants.

It's going to happen, but it's going to take time. Power plants take more time to construct than data centers and have significantly more regulatory overhead.

Thus, if there is a constraint to new power generation and, therefore, new data center construction, how will the world meet the demand for AI computation?

My bet: client-side computing power (or edge and device computing power, if you prefer).

For example, the latest Apple computers with Apple CPUs are exceptionally good at running AI jobs. Just watch this video of an AI running entirely on a Mac laptop to generate code. It's basically ChatGPT "lite," running on a laptop!

Client-side AI has several advantages.

You already have a client-side device--actually, many client-side devices: your laptop, phone, watch, and even your TV!

There is already power "allocated" for these devices--no new power plants are needed.

AI running locally can safely process confidential corporate data.

Will the client-side AI become as powerful as the exascale cloud AIs from OpenAI and others?

Probably not. But it doesn't matter!

It will not become an "either-or" choice of client or cloud. One of the cool things about AI technology is that you can essentially "mix and match" and combine different techniques together (indeed, this is how ChatGPT itself works on the inside). Local, client-side AIs can be combined in a hybrid fashion with the more sophisticated cloud AIs.

Consider, for example, AI-powered email software in the near future. The email client running on your laptop might use the "cheap" AI to weed out spam emails, summarize newsletters and marketing emails, and then rely on the premium, cloud-based AI to help you compose a new email to your boss. It's a hybrid solution, taking advantage of all the processing power available, both cloud and local.
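To make the email example concrete, here is a minimal sketch of how such a client might decide where each job runs. Everything in it is an assumption for illustration: the task names, the `Tier` enum, and the rule that unknown work falls back to the cloud are hypothetical, not a description of any real email product.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    LOCAL = "local"  # small, "cheap" model running on the laptop
    CLOUD = "cloud"  # premium hosted model


# Hypothetical task names, taken from the email example in the text.
LOCAL_TASKS = {"spam_filter", "summarize_newsletter", "summarize_marketing"}


@dataclass
class EmailTask:
    kind: str
    text: str


def choose_tier(task: EmailTask) -> Tier:
    """Routine, high-volume work stays local; everything else goes to the cloud."""
    if task.kind in LOCAL_TASKS:
        return Tier.LOCAL
    # Assumption: anything not known to be routine gets the premium model.
    return Tier.CLOUD


def plan(tasks: list[EmailTask]) -> dict[Tier, list[str]]:
    """Group task kinds by the tier that would handle them."""
    result: dict[Tier, list[str]] = {Tier.LOCAL: [], Tier.CLOUD: []}
    for task in tasks:
        result[choose_tier(task)].append(task.kind)
    return result


if __name__ == "__main__":
    inbox = [
        EmailTask("spam_filter", "You have won a prize"),
        EmailTask("summarize_newsletter", "This week in tech"),
        EmailTask("compose_to_boss", "Draft a status update"),
    ]
    for tier, kinds in plan(inbox).items():
        print(tier.value, kinds)
```

A real client would likely weigh more than the task type (battery level, latency, cost per request), but the shape of the decision is the same: a cheap default, with the expensive model reserved for the work that needs it.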

Think of this like running a restaurant. Yes, you could hire Gordon Ramsay to do *everything* in your restaurant, from grocery shopping to chopping onions to making the meals, running the cash register, and washing the dishes. But Gordon Ramsay is famous--and expensive! A restaurant would go broke if the executive chef did all that work.

The premium, exascale AIs such as those from OpenAI are like Gordon Ramsay: top-notch and constantly getting better, but with a price to match. Just as a restaurant would hire other, more junior staff to buy the food, prep the onions, clean the dishes, and so forth, the same is going to be true for AI solutions in the future. AI solutions will increasingly be hybrid solutions using the available computing power as cost-effectively as possible, both client-side and in the cloud.

Where this gets exciting is what might happen with devices going forward. I think we will see a resurgence of innovation in devices for your home and office.

The obvious example is the laptop or desktop you are using day to day. Intel will, one way or the other, catch up to Apple's silicon, but regardless, there will soon be a day when you are eager to upgrade your PC to get the email AI working faster and more accurately through your massive email backlog.

But it's not going to stop there. When was the last time you bought a TV? Why did you buy it? Maybe you bought a 4K TV a few years ago to watch the Super Bowl. But if you have a decent flat screen today, what would compel you to get a new one? Sharper colors with an OLED vs. your current TV? Eh...maybe, but <yawn> probably not.

What if a new TV had a built-in AI assistant running locally (so no expensive subscriptions, or at worst, a relatively cheap subscription)? With an actual screen, you could see this AI assistant and interact with it--you could have a 3D-rendered "AI person" talking to you, not just the disembodied voice of today's Alexa devices.

Of course, there are more AI scenarios than just assistants! I would love to have an AI picture frame on my wall that could show photos of my kids and generate new AI artwork. The exercise equipment at my gym could have an AI screen showing me a real-time generated video of how I could improve my form or otherwise do better. AI in stores and offices could help monitor security cameras or suggest when to send staff to assist shoppers who need help.

It is not just home scenarios where we will see a resurgence in client-side computing. I suspect we will also see a comeback of the corporate data center. While there are substantial advantages to having a lot of corporate computing in the cloud, that cost equation shifts for heavy and predictable workloads. The team at Basecamp wrote an excellent series of articles going over the cost savings--and this is without AI features driving demand. They saved millions of dollars a year by moving off of Amazon.

As AI technologies improve and deliver more and more value to companies, I expect many companies will look at the benefits and ask the question: what if we had AI agents running 24/7 for all aspects of our business--marketing, back office, customer support, etc?

For many industries, AI is going to reshape the entire way companies interact with their customers. We are starting to see this already, as B2B software companies are beginning to add AI-driven "upsell" features (e.g., "We noticed you are doing a lot of X--if you buy the special X-add-on-pack to Office 9000, we can help you with that").

I expect most of these corporate scenarios to be solved with hybrid AI architectures--taking advantage of the latest and best exascale AI while the bulk of the work is done by cheap, on-premises AIs. Hybrid architectures will be particularly important when the AI needs to access and interact with sensitive corporate data, such as customer data or proprietary intellectual property.
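As a rough illustration of that last point, a placement rule for corporate AI jobs might put data sensitivity ahead of everything else. This is only a sketch under stated assumptions: the `Job` fields and the `"on_prem"` / `"cloud"` labels are hypothetical names invented for the example, and a real policy would be far more nuanced.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    touches_sensitive_data: bool  # e.g., customer data or proprietary IP
    needs_premium_model: bool     # work the cheap on-premises model can't handle


def place(job: Job) -> str:
    """Decide where a job runs; sensitivity is checked first and always wins."""
    if job.touches_sensitive_data:
        return "on_prem"
    if job.needs_premium_model:
        return "cloud"
    # Default: the cheap on-premises model handles the bulk of the work.
    return "on_prem"


if __name__ == "__main__":
    jobs = [
        Job("triage support tickets", touches_sensitive_data=True, needs_premium_model=False),
        Job("draft a public blog post", touches_sensitive_data=False, needs_premium_model=True),
        Job("tag internal wiki pages", touches_sensitive_data=False, needs_premium_model=False),
    ]
    for job in jobs:
        print(f"{job.name}: {place(job)}")
```

The ordering of the checks is the design choice here: a job that touches sensitive data stays on-premises even if it would benefit from the premium model.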

While all of these scenarios, from the home to the office, could be done entirely with cloud-side AI (after all, the cloud is just computers owned by somebody else!), I think the benefits of AI are going to drive the push for more, and cheaper, computing power. The cheaper I can run AI, the more value I can get from it. I am willing to pay $100 for my AI photo frame, but I'm definitely not interested in paying $1,000. Similarly, if I can use AI for an upsell marketing campaign, it will have to be done within a specific marketing budget.

In all of these cases, the demand will be pushing hybrid architectures and, with that, client-side computing.

As computing hardware continues its relentless advance (e.g., Apple's newest M3 chips arrived only three years after the original M1 and are over twice as fast), there will be renewed value and ROI for being on faster upgrade cycles.

The only question left is which hardware companies will deliver these breakthrough scenarios. Dell? Vizio? Samsung? Apple?

In the meantime, it's time to save up for a new TV!